Bufferbloat

Revision as of 03:54, 22 March 2017

Untangle can help you deal with bufferbloat-related performance issues.

What is Bufferbloat?

Bufferbloat is latency introduced by excess buffering in networking equipment.

But wait! Aren't buffers a good thing?

Yes, buffers have many great properties. They help us maximize throughput and deal with fluctuations in bandwidth. However, in some circumstances they can also introduce latency, and they can do so unfairly. This can reduce the performance of many latency-sensitive applications.

Bufferbloat in action

Let's take a closer, very simplified, look at how this happens. Let's assume a fairly simple setup: a client sending a big file to a server on the internet through a modem that uses a large FIFO (first-in-first-out) send buffer.

Bufferbloat is a property of the system as a whole, but the actual "bloat" usually occurs where the bandwidth goes from a large amount to a small amount, because this is where the buffers fill up. For example, assume you have a local gigabit network and a 100Mbit internet connection. In this case, one side of the modem is gigabit and the other side is a 100Mbit connection. Because of this, when uploading a large file, packets can come in faster than they can be sent, causing the send buffer to fill up.

If there is only one connection, a TCP session to upload the file (rendered in green), then all is fine. The buffer will fill up, but the data will be sent as quickly as possible on the 100Mbit connection. A file upload is not very latency sensitive, so the fact that a packet may sit in a buffer for 500ms waiting to be sent is not an issue.

However, let's assume you start a VOIP call at the same time, and let's render the VOIP packets in purple. A VOIP call won't actually send much data, as audio is small, but it is very latency sensitive.

The problem is that now when the VOIP client sends a packet, the packet gets added to the end of the buffer behind all the existing data queued to be sent. As it waits in the queue, this will introduce severe latency. Now the 500ms queue wait time will absolutely destroy the VOIP call quality.
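To put a number on that wait, here is a minimal sketch of the arithmetic: the delay a newly arrived packet sees in a FIFO buffer is roughly the data already queued divided by the link speed. The function name and the 6.25 MB figure are illustrative assumptions, chosen so the result matches the 500ms used in this example.

```python
def queue_delay_ms(queued_bytes: int, link_mbit: float) -> float:
    """Time (ms) for a FIFO buffer holding queued_bytes to drain at link_mbit."""
    link_bytes_per_sec = link_mbit * 1_000_000 / 8  # megabits/s -> bytes/s
    return queued_bytes / link_bytes_per_sec * 1000


# Assumed figure: 6.25 MB of upload data queued ahead of the VOIP packet
# on the 100Mbit connection from the example.
print(queue_delay_ms(6_250_000, 100))  # 500.0
```

The same buffer on a slower link drains more slowly, so the delay grows as the link speed drops.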

In the real world the effect of this is that latency will tend to get very high when a lot of bandwidth is in use.

You can read a lot more about the issue at bufferbloat.net and even test your connection's current performance at dslreports.

How to fix bufferbloat

There are much better/fairer queueing algorithms than the simple FIFO queue used above. Some queueing algorithms (or "queue disciplines" as they are called) have been developed to solve bufferbloat latency. These mainly rely on two tactics: using a separate queue per connection (or a large number of queues and a hash function to spread connections across them) and controlled delaying of certain packets.
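As a rough illustration of the "hash function to spread usage" idea, the sketch below maps a connection's addresses, ports, and protocol to one of a fixed number of queues. CRC32 is used here only as a convenient stand-in; it is not the hash a real queue discipline uses, and the queue count, function name, and addresses are assumptions made for illustration.

```python
import zlib

NUM_QUEUES = 1024  # assumed queue count, for illustration only


def queue_index(src_ip, src_port, dst_ip, dst_port, proto, num_queues=NUM_QUEUES):
    """Pick a queue for a connection by hashing its identifying fields."""
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}/{proto}".encode()
    return zlib.crc32(key) % num_queues


# Hypothetical connections: every packet of one connection lands in the same
# queue, and different connections usually land in different queues.
upload = queue_index("192.168.1.10", 50000, "203.0.113.5", 443, "tcp")
voip = queue_index("192.168.1.10", 40000, "203.0.113.9", 5060, "udp")
print(upload, voip)
```

Two connections can occasionally hash to the same queue, which is why a large number of queues is used.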

Using the example above, there may be much data queued to be sent, but when a VOIP packet comes in - it will not go onto the end of the existing queue for the file upload. It will go immediately to the front of its own queue and be sent very quickly. The VOIP connection is not "starved" by the file upload. In this world, you get both maximum bandwidth and good latency.
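The difference can be sketched with a toy model that compares the sending order of a single FIFO queue with a simple round-robin over per-connection queues. This is a deliberate simplification and not the actual fq_codel algorithm; the function names and packet counts are made up for illustration.

```python
from collections import deque


def fifo_order(packets):
    """Single FIFO queue: packets leave in arrival order."""
    return list(packets)


def fair_order(packets):
    """One queue per flow, serviced round-robin (one packet per flow per pass)."""
    queues = {}
    for flow, seq in packets:
        queues.setdefault(flow, deque()).append((flow, seq))
    sent = []
    while queues:
        for flow in list(queues):  # copy the keys so empty queues can be removed
            sent.append(queues[flow].popleft())
            if not queues[flow]:
                del queues[flow]
    return sent


# 50 upload packets are already queued when one VOIP packet arrives.
packets = [("upload", i) for i in range(50)] + [("voip", 0)]
voip = ("voip", 0)
print(fifo_order(packets).index(voip))  # 50: waits behind the whole upload
print(fair_order(packets).index(voip))  # 1: sent on the first pass
```

In the FIFO case the VOIP packet's wait grows with the size of the upload backlog; with per-flow queues it stays short no matter how much upload data is queued.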

Read a great explanation of queue disciplines here.

Improving bufferbloat is simple - just log into your modem or the device where queueing typically happens in your network connection, and enable the "fq_codel" queue discipline. Unfortunately, you can't do much about the devices used elsewhere in your internet connection like the networking equipment on the other end in your ISP.

The problem arises when your modem or network equipment does not support any intelligent queue discipline. Unfortunately, this is true in my case, and it is usually the case.

How Untangle can help!

If Untangle sits in the network path where it goes from a large amount of bandwidth to a small amount of bandwidth, then simply go into Config > Network > Advanced > QoS, change Queue Discipline to Fair/Flow Queueing + Codel (fq_codel), and hit save. That's it - you're done!

However, Untangle usually sits directly behind a modem device of some sort and not where the actual queueing takes place.

In the diagram above you can see the queue filling up in the modem. The queues on Untangle will remain mostly empty because the packets can be sent as quickly as they can be received. As such, the queue discipline on Untangle cannot help you, because the modem will still introduce bloat by using a FIFO queue.

Fear not - there is still hope!

You can enable QoS limits to artificially limit the WAN speed on Untangle so that it is *slightly* less than the actual speed the modem gets. In other words, Untangle is now (artificially) where the step-down in bandwidth occurs.

By using QoS to limit the WAN speed to slightly less than the actual speed, the buffering now occurs on the Untangle device itself AND it also allows QoS and Bandwidth Control prioritization to work!

To get this configured, simply enable QoS, configure the WAN download and upload speeds to be slightly less than actual, and then configure the Queue Discipline to Fair/Flow Queueing + Codel (fq_codel).

With this setup, the queueing all takes place on Untangle and is done fairly to minimize latency and maximize throughput!

Real world example

In my case, I have a 100Mbit Comcast cable connection with a DOCSIS 3.0 modem. With this setup my bufferbloat score is quite bad. My connection is great, but if I try to download a large file on bittorrent, my skype video performance becomes terrible.

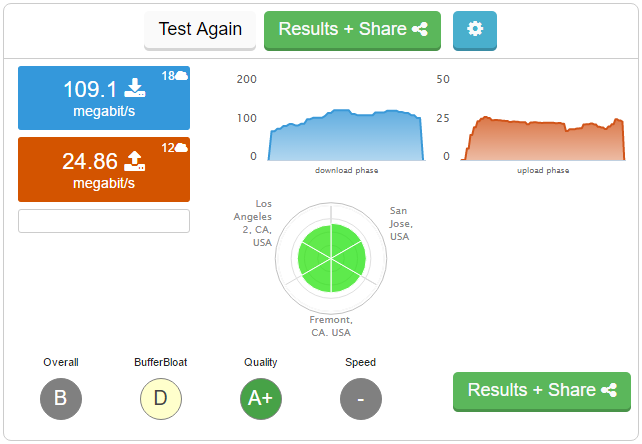

Using the dslreports.com test shows my connection has a lot of bufferbloat:

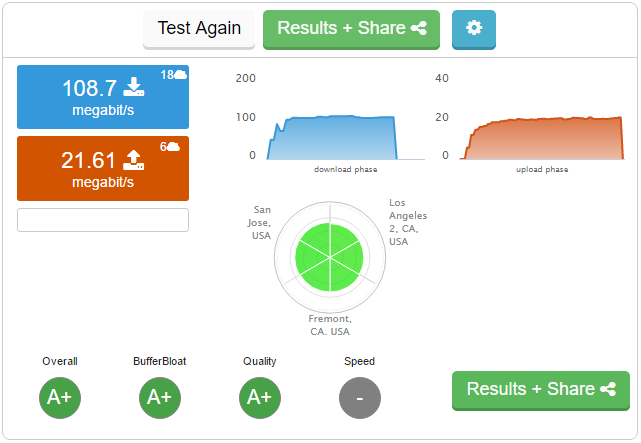

In the real world I get a maximum of 125Mbit download and around 24Mbit upload. As such I set my QoS WAN limits to 120Mbit down and 22Mbit up. This ensures that the actual queueing happens on Untangle (and also allows Bandwidth Control prioritization!)

With this configuration I will lose a tiny bit of bandwidth, but my latency during usage is much much lower.
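The bandwidth given up by these limits can be checked with a little arithmetic. The sketch below uses the measured speeds and limits from this example; the helper names are illustrative, and the right amount of headroom for another connection is something to find by testing, not a fixed rule.

```python
def headroom_percent(measured_mbit: float, limit_mbit: float) -> float:
    """Share of the measured speed given up by setting a lower QoS limit."""
    return (measured_mbit - limit_mbit) / measured_mbit * 100


def suggested_limit(measured_mbit: float, percent: float) -> float:
    """QoS limit that leaves the given headroom (hypothetical helper)."""
    return measured_mbit * (1 - percent / 100)


print(round(headroom_percent(125, 120), 1))  # 4.0 (download)
print(round(headroom_percent(24, 22), 1))    # 8.3 (upload)
print(round(suggested_limit(125, 4), 1))     # 120.0
```

If the bufferbloat test still shows high latency under load, lowering the limits a little further is a reasonable next step.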

Success!

Another great thing about this setup is that when using both QoS and fq_codel, each priority level of QoS and Bandwidth Control gets its own separate fq_codel queueing mechanism. This means I can configure my Bandwidth Control rules to prioritize highly latency-sensitive applications.

This provides two layers of protection from bloat: these applications are separated from lower priority applications, and fq_codel also protects from bloat even amongst other traffic of the same priority!